The VMkernel relies on the physical device, the pNIC in this case, to generate interrupts to process network I/O. This traditional style of I/O processing adds delay along the entire data path, from the pNIC all the way up into the guest OS. The benefit of interrupt-based processing is that it saves CPU cycles, because multiple I/Os are combined into one interrupt. In poll mode, the driver and the application running in the guest OS constantly spin, waiting for an I/O to become available. The application can then process the I/O almost instantly instead of waiting for an interrupt to occur, which allows for lower latency and a higher packets-per-second (PPS) rate.

An interesting fact is that the world is moving towards poll-mode drivers. A clear example of this is the NVMe driver stack.

The main drawback is that the poll-mode approach consumes much more CPU time because of the constant polling for I/O and the immediate processing. Basically, it consumes all the CPU time you give to the vCPUs used for polling. Therefore, it is primarily useful when the workloads running on your VMs are extremely latency sensitive. It is a perfect fit for data plane telecom applications, such as a Packet Gateway (PGW) node as part of an Evolved Packet Core (EPC) in an NFV environment, or for other real-time, latency-sensitive workloads.
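To make the trade-off concrete, here is a minimal toy model, not a measurement. It assumes packets are only handed to the application at fixed "service points": a coalesced interrupt every 50 µs, or a poll every 100 ns. All numbers and function names are hypothetical and chosen purely for illustration.

```python
def wait_until_serviced(arrival_ns, period_ns):
    """Time a packet waits for the next service point (interrupt or poll)."""
    # Round the arrival time up to the next multiple of the service period.
    next_service_ns = -(-arrival_ns // period_ns) * period_ns
    return next_service_ns - arrival_ns


def summarize(arrivals_ns, period_ns):
    """Return wait statistics and the number of service events in the window."""
    waits = [wait_until_serviced(t, period_ns) for t in arrivals_ns]
    window_ns = max(arrivals_ns)
    return {
        "mean_wait_ns": sum(waits) / len(waits),
        "max_wait_ns": max(waits),
        # Every service event costs CPU, whether or not a packet is waiting.
        "service_events": window_ns // period_ns + 1,
    }


# Assumed traffic: 150 packets, one every 7.31 microseconds.
ARRIVALS_NS = [i * 7310 for i in range(150)]

# Assumed interrupt coalescing: at most one interrupt every 50 microseconds.
interrupt_mode = summarize(ARRIVALS_NS, 50_000)
# Assumed poll loop: one queue check every 100 nanoseconds.
poll_mode = summarize(ARRIVALS_NS, 100)

print("interrupt:", interrupt_mode)
print("poll:     ", poll_mode)
```

In this model the poll loop never keeps a packet waiting longer than its 100 ns period, but it runs several hundred times more service events over the same window. That is the latency-for-CPU exchange described above.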

To use the poll-mode approach, you need a poll-mode driver in your application that polls a specific device queue for I/O. From a networking perspective, Intel’s Data Plane Development Kit (DPDK) delivers just that. You could say that the DPDK framework is a set of libraries and drivers that allow for fast network packet processing.
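The shape of such a polling loop can be sketched in a few lines. The example below is a toy model in Python, not DPDK code: `ToyRxQueue` and `poll_loop` are hypothetical names, and `rx_burst` is only loosely modeled on the burst-style receive call that DPDK applications use (`rte_eth_rx_burst` in C). A real poll-mode application would spin indefinitely on a dedicated core; the loop here is bounded so that it terminates.

```python
from collections import deque


class ToyRxQueue:
    """Hypothetical stand-in for a device RX queue; not a DPDK API."""

    def __init__(self):
        self._ring = deque()

    def enqueue(self, packet):
        """Simulate the device placing a received packet on the queue."""
        self._ring.append(packet)

    def rx_burst(self, max_packets):
        """Return up to max_packets packets without blocking (may be empty)."""
        burst = []
        while self._ring and len(burst) < max_packets:
            burst.append(self._ring.popleft())
        return burst


def poll_loop(queue, handle_packet, max_polls, burst_size=32):
    """Busy-poll the queue; return (packets processed, empty polls)."""
    processed = 0
    empty_polls = 0
    for _ in range(max_polls):
        burst = queue.rx_burst(burst_size)
        if not burst:
            # Nothing arrived: the CPU cycle is spent anyway.
            empty_polls += 1
            continue
        for packet in burst:
            handle_packet(packet)
            processed += 1
    return processed, empty_polls


queue = ToyRxQueue()
for seq in range(70):
    queue.enqueue(f"packet-{seq}")

seen = []
processed, empty_polls = poll_loop(queue, seen.append, max_polls=10)
print(processed, empty_polls)  # 70 packets in 3 bursts, then 7 empty polls
```

The empty polls are the visible cost of the approach: the loop keeps checking the queue even when there is nothing to do.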

Data Plane Development Kit (DPDK) greatly boosts packet processing performance and throughput, allowing more time for data plane applications. DPDK can improve packet processing performance by up to ten times. DPDK software running on current generation Intel® Xeon® Processor E5-2658 v4, achieves 233 Gbps (347 Mpps) of LLC forwarding at 64-byte packet sizes. Source: http://www.intel.com/content/www/us/en/communications/data-plane-development-kit.html
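The two figures in that quote are consistent with each other if you assume the bit rate is counted on the wire. Each 64-byte Ethernet frame then also carries 20 bytes of preamble, start-of-frame delimiter, and inter-frame gap. That on-wire accounting is an assumption on our part, since the quote does not state how the rate was calculated. A quick check:

```python
FRAME_BYTES = 64
# Assumed per-frame Ethernet wire overhead: preamble (7) + SFD (1) + inter-frame gap (12).
WIRE_OVERHEAD_BYTES = 7 + 1 + 12


def line_rate_gbps(mpps, frame_bytes=FRAME_BYTES):
    """Convert a packet rate in Mpps to an on-wire bit rate in Gbps."""
    bits_per_packet = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return mpps * 1e6 * bits_per_packet / 1e9


print(round(line_rate_gbps(347), 1))  # 233.2
```

Without the wire overhead, 347 Mpps of 64-byte frames would amount to only about 178 Gbps, so the on-wire interpretation is the one that matches the quoted number.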

DPDK in a VM

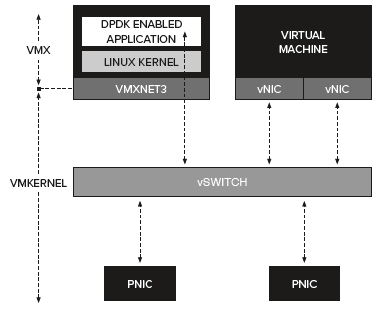

Using a VM with a VMXNET3 network adapter, you already have the default paravirtual network connectivity in place. The following diagram shows the default logical paravirtual device connectivity.

It is recommended to use the VMXNET3 virtual network adapter when you are running a DPDK-enabled application. It also helps if you have a pNIC that is optimized for use with DPDK. Since DPDK version 1.8, which was released in 2014, the VMXNET3 Poll-Mode Driver (PMD) is included, and it contains features to increase packet rates.

The VMXNET3 PMD handles all the packet buffer memory allocation and resides in the guest address space. It is solely responsible for freeing that memory when it is no longer needed. The packet buffers and the features to be supported are made available to the hypervisor via the VMXNET3 PCI configuration space Base Address Registers (BARs). During RX/TX, the packet buffers are exchanged by their Guest Physical Address (GPA): the hypervisor loads the buffers with packets in the RX case and sends packets to the vSwitch in the TX case. Source: http://dpdk.org/doc/guides/nics/vmxnet3.html
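The RX half of that exchange can be illustrated with a heavily simplified toy model. Everything in it is hypothetical: the class and function names, the fake address values, and the use of a plain Python list as the descriptor ring. The real VMXNET3 ring layout and device interface are considerably more involved. The sketch only shows the division of labor: the guest allocates and frees buffers, and the hypervisor side fills the buffers it is pointed at.

```python
class ToyGuestMemory:
    """Hypothetical guest memory: maps a fake guest physical address to a buffer."""

    def __init__(self, buffer_size=2048):
        self.buffer_size = buffer_size
        self._buffers = {}
        self._next_gpa = 0x1000

    def alloc(self):
        gpa = self._next_gpa
        self._next_gpa += self.buffer_size
        self._buffers[gpa] = bytearray(self.buffer_size)
        return gpa

    def free(self, gpa):
        del self._buffers[gpa]

    def buffer(self, gpa):
        return self._buffers[gpa]

    def live_buffers(self):
        return len(self._buffers)


def guest_post_rx_buffers(mem, rx_ring, count):
    """Guest side: allocate buffers and publish their addresses in the ring."""
    for _ in range(count):
        rx_ring.append({"gpa": mem.alloc(), "length": 0, "done": False})


def hypervisor_deliver(mem, rx_ring, frame):
    """Hypervisor side: copy a frame into the first unused posted buffer."""
    if len(frame) > mem.buffer_size:
        return False
    for desc in rx_ring:
        if not desc["done"]:
            mem.buffer(desc["gpa"])[:len(frame)] = frame
            desc["length"] = len(frame)
            desc["done"] = True
            return True
    return False  # no posted buffer available: the frame is dropped


def guest_harvest(mem, rx_ring):
    """Guest side: collect completed frames in order, then free their buffers."""
    frames = []
    while rx_ring and rx_ring[0]["done"]:
        desc = rx_ring.pop(0)
        frames.append(bytes(mem.buffer(desc["gpa"])[:desc["length"]]))
        mem.free(desc["gpa"])  # only the guest ever frees packet memory
    return frames


mem = ToyGuestMemory()
rx_ring = []
guest_post_rx_buffers(mem, rx_ring, count=2)
delivered = [hypervisor_deliver(mem, rx_ring, f) for f in (b"ping", b"pong", b"late")]
frames = guest_harvest(mem, rx_ring)
print(delivered, frames, mem.live_buffers())
```

In this run the third frame is dropped because the guest posted only two buffers, which mirrors why a poll-mode driver keeps replenishing its RX ring.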

When investigating a VM running a DPDK enabled application, we see that the DPDK enabled application is allowed to directly interact with the virtual network adapter.

vSphere’s paravirtual network adapter, VMXNET3, has been supported since Intel DPDK release 1.6. The emulated E1000 and E1000E virtual network adapters have been supported since DPDK version 1.3, but they are not recommended. Using the DPDK-enabled paravirtual network adapter still allows you to use all vSphere features, such as High Availability, Distributed Resource Scheduler (DRS), Fault Tolerance, and snapshots.

More information…

…can be found in the vSphere 6.5 Host Resources Deep Dive book that is available on Amazon!