In my current role, I am involved in a lot of discussions around network functions virtualization, a.k.a. NFV. When I talk about NFV in this post, I mean telco applications: applications specifically designed for and used by Communications Service Providers (CSPs) as core applications that, for instance, enable your (mobile) phone to actually call another phone. 🙂

NFV for telco applications is not that mainstream yet, so it seems. The old-school native way, with telco-specific hardware running line cards, payload servers, etc., is obviously not sustainable given the way we like to do ICT today. On the other hand, it looks like telco application vendors are still finding their way in properly adopting virtualization as a technology. So the level of virtualization adoption for network functions appears to be a few years behind IT application server virtualization.

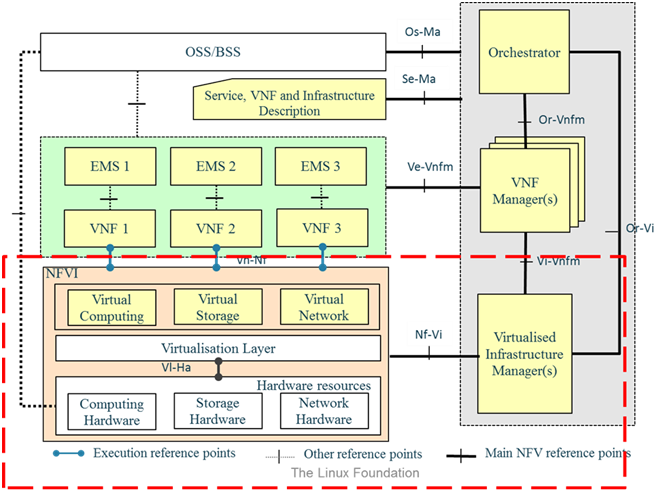

But development is rapid, and that goes for NFV as well. There is already an NFV Architecture Framework created by ETSI, which was selected in November 2012 to be the home of the Industry Specification Group for NFV. The framework is a high-level functional architecture and design philosophy for virtualized network functions and the underlying virtualization infrastructure as shown in the following diagram:

Although word has it that NFV is mostly deployed using a KVM hypervisor working closely with OpenStack as the API framework for NFV, VMware is looking to address the needs of communications service providers to properly ‘do’ NFV using VMware solutions. Hence the vCloud for NFV suite.

VMware vCloud NFV is a Network Functions Virtualization (NFV) services delivery, operations and management platform, developed for Communications Service Providers (CSPs) who want to reduce infrastructure CapEx and OpEx costs, improve operational agility and monetize new services with rapid time to market.

Let’s have a closer look at tuning considerations for vSphere to properly run NFV workloads!

NFV workloads

First of all, it is important to understand that NFV workloads are not comparable to your typical IT application workloads. NFV workloads can be very demanding, realtime and latency sensitive… with a great appetite for CPU cycles and network performance. Storage performance seems less important. Looking at NFV workloads, you could divide them into the following categories:

- Data plane workloads: High packet rates, requiring network performance/capacity

- Control plane workloads: CPU and memory intensive

- Signal processing workloads: Latency and jitter sensitive

vSphere and NFV

So let’s focus on the vSphere component. What are the settings you should think about when serving NFV workloads using vSphere?

Standard sizing and over-commit ratios for compute resources won’t cut it. We’ve seen telco applications thrive when assigned more vCPUs (linear performance scaling), thus leveraging HT (Hyper-Threading). Other NFV applications will greatly benefit from the latency sensitivity setting within vSphere, thus claiming CPU cores. In the end, it all comes down to having predictable NFV performance; that is the problem we are trying to solve here. vSphere allows for some tricks to ensure, as much as possible, predictability in performance.

BIOS

To ensure optimal performance from your host hardware, power management should always be set to ‘high performance’. Other considerations with regard to CPU C-states and Turbo Boost are noted in the VMware documentation:

Servers with Intel Nehalem class and newer (Intel Xeon 55xx and newer) CPUs also offer two other power management options: C-states and Intel Turbo Boost. Leaving C-states enabled can increase memory latency and is therefore not recommended for low-latency workloads. Even the enhanced C-state known as C1E introduces longer latencies to wake up the CPUs from halt (idle) states to full-power, so disabling C1E in the BIOS can further lower latencies. Intel Turbo Boost, on the other hand, will step up the internal frequency of the processor should the workload demand more power, and should be left enabled for low-latency, high-performance workloads. However, since Turbo Boost can over-clock portions of the CPU, it should be left disabled if the applications require stable, predictable performance and low latency with minimal jitter.

Compute resources

Let’s start by fully reserving memory and CPU resources, so each NFV VM gets 100% dedicated compute resources. This will guarantee the VM’s ability to use the full resources it is entitled to, but… you can imagine this will limit the ability of vCenter / vSphere to use the cluster resources as efficiently as possible. As it stands for now, over-commitment is a no-go for our NFV VMs.
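As a rough illustration of what a full reservation means in numbers, here is a minimal Python sketch. It assumes that a full CPU reservation equals the number of vCPUs times the clock speed of a physical core (vSphere expresses CPU reservations in MHz) and that a full memory reservation equals the configured VM memory. The `VmSize` class, the `full_reservation` helper and the example sizes are hypothetical and not taken from any VMware tooling.

```python
from dataclasses import dataclass


@dataclass
class VmSize:
    """Hypothetical description of an NFV VM's configured size."""
    vcpus: int
    memory_mb: int


def full_reservation(vm: VmSize, core_mhz: int) -> dict:
    """Return the reservations that would dedicate 100% of the VM's compute.

    Assumption: one vCPU can consume at most one physical core's worth of
    cycles, so the full CPU reservation is vcpus * core_mhz.
    """
    return {
        "cpu_reservation_mhz": vm.vcpus * core_mhz,
        "memory_reservation_mb": vm.memory_mb,
    }


# Example values are made up: an 8 vCPU / 32 GB VM on 2600 MHz cores.
print(full_reservation(VmSize(vcpus=8, memory_mb=32768), core_mhz=2600))
```

Adding up these reservations per host gives a quick feel for how many NFV VMs fit once over-commitment is off the table.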

Worth mentioning is that some ‘strange’ behavior was noticeable during performance tests. It looked like the CPU scheduler within vSphere (5.5) was moving the VMs between NUMA nodes a lot when load was about 50%, causing some additional latency peaks. This test was done on a fairly basic vSphere configuration and I am not sure about the memory pressure of the test load. I will try to get some more information about how and why we saw this behavior in a future post.

(Advanced) vSphere configurations we are evaluating contain settings like:

- Prefer hyper-threads on the local NUMA node (rather than spreading vCPUs across nodes) for a specific VM with numa.vcpu.preferHT=TRUE

- Apply the same preference to a whole vSphere host with numa.PreferHT=1

- Enforce processor affinity for vCPUs to be scheduled on specific NUMA nodes, as well as memory affinity for all VM memory to be allocated from those NUMA nodes, using numa.nodeAffinity=0,1,…, (processor socket numbers)

- Reduce the vNUMA default value if the application is NUMA-aware / optimized. The default value is numa.vcpu.min=9. So if you configure smaller VMs, consider using numa.autosize.once=FALSE and numa.autosize=TRUE
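To make the per-VM settings above a bit more concrete, the following Python sketch renders them as `key = "value"` lines, the form in which advanced settings appear in a VM's configuration file. The `render_vmx_lines` helper is hypothetical, the node affinity value `0,1` is only an example, and the spelling `numa.nodeAffinity` is the one used by vSphere. Treat it as a way to keep such settings reviewable, not as a recommended configuration.

```python
def render_vmx_lines(settings: dict[str, str]) -> list[str]:
    """Render advanced settings as 'key = "value"' lines (hypothetical helper)."""
    return [f'{key} = "{value}"' for key, value in settings.items()]


# Per-VM NUMA settings from the list above; the values are examples only.
numa_settings = {
    "numa.vcpu.preferHT": "TRUE",
    "numa.nodeAffinity": "0,1",
    "numa.autosize.once": "FALSE",
    "numa.autosize": "TRUE",
}

for line in render_vmx_lines(numa_settings):
    print(line)
```

The host-wide setting (numa.PreferHT=1) is a host advanced option and is deliberately left out of this per-VM sketch.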

vMotion

Another thing worth mentioning is that some telco application vendors are still very wary of using vMotion. This is because a lot of telco applications have resiliency built in, which makes perfect sense. The heart-beating mechanism used in those applications usually has triggers with very little room for a hiccup in VM availability. So these vendors found that a vMotion, and the short ‘stall’ involved, was enough to trigger an application or ‘node’ fail-over.

My advice here is to verify per case whether this is actually accurate. vSphere DRS is often used in ‘partially automated’ mode for initial placement with regard to the (anti-)affinity rules. But you can easily override this setting per VM (to change it to ‘fully automated’) when vMotion is perfectly possible for that VM. This will allow DRS to do at least some automatic (re)balancing.
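When verifying this per case, it can help to reason about the heartbeat tolerance explicitly. The sketch below uses a deliberately simple model that is my own assumption: a fail-over fires when the stall lasts longer than the heartbeat interval multiplied by the number of missed heartbeats the application tolerates. The function name and all numbers are hypothetical, not measurements.

```python
def stall_triggers_failover(
    stall_ms: float,
    heartbeat_interval_ms: float,
    missed_beats_allowed: int,
) -> bool:
    """Return True if a stall of stall_ms would exceed the heartbeat tolerance.

    Simplified model (assumption): the application tolerates
    heartbeat_interval_ms * missed_beats_allowed of silence before failing over.
    """
    tolerance_ms = heartbeat_interval_ms * missed_beats_allowed
    return stall_ms > tolerance_ms


# Hypothetical numbers: a 500 ms stall against two different heartbeat setups.
print(stall_triggers_failover(500, heartbeat_interval_ms=100, missed_beats_allowed=3))
print(stall_triggers_failover(500, heartbeat_interval_ms=1000, missed_beats_allowed=3))
```

Real applications may use more elaborate detection logic, so the actual stall during a vMotion still has to be measured against the vendor's documented timers.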

Network

It is important to know what limitations the distributed vSwitch might impose. Think about possible limitations on the maximum kpps (kilo packets per second) per vSphere host. Multiplying the maximum kpps by the average packet size gives an estimate of how much bandwidth it will be able to process. The assumption is that the (distributed) vSwitch will provide sufficient kpps / throughput capabilities, but it seems very hard to verify this by determining its maximums per host. Tests are being performed to get more insight into this.
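The kpps-to-bandwidth estimate mentioned above is simple arithmetic, sketched here in Python. The function name and the input values are hypothetical, and the calculation ignores per-frame overhead on the wire (preamble, inter-frame gap), so it is a ballpark figure only.

```python
def estimate_throughput_gbps(kpps: float, avg_packet_bytes: float) -> float:
    """Estimate throughput in Gbit/s from a packet rate in kpps and an
    average packet size in bytes."""
    packets_per_second = kpps * 1_000
    bits_per_second = packets_per_second * avg_packet_bytes * 8
    return bits_per_second / 1e9


# Hypothetical inputs: 1,000 kpps at an average of 512 bytes per packet.
print(f"{estimate_throughput_gbps(1_000, 512):.3f} Gbit/s")  # prints "4.096 Gbit/s"
```

The same packet rate with small 64-byte packets yields only about 0.5 Gbit/s, which is why data plane workloads are usually bound by packet rate rather than raw bandwidth.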

Intel DPDK, the Data Plane Development Kit, for vSphere is also on the menu. In a nutshell, it is a set of libraries and poll-mode drivers for fast packet processing. First evaluations show great gains in network performance.

You should also consider the use of VMware vSphere DirectPath I/O or SR-IOV, which allow a guest OS to directly access an I/O device, bypassing the virtualization layer. But beware of the consequences these settings introduce, such as the inability to use HA, DRS, (Storage) vMotion, etc.

Other important considerations for network tuning in vSphere are:

- Does your physical NIC support NetQueue?

- It is recommended to configure NICs with “InterruptThrottleRate=0” to disable physical NIC interrupt moderation, which reduces latency for latency-sensitive VMs.

- Tune the pNIC Tx queue size (default 500, can be increased up to 10,000 packets).

- Always use the VMXNET3 adapter.

- Should you disable virtual interrupt coalescing for VMXNET3?

- Is having only one transmit thread per VM a bottleneck for high packet rates?

- Should you use one Tx thread per vNIC by setting ethernetX.ctxPerDev = “1”?

- For best performance, place the VM and its transmit threads on the same NUMA node.
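For the per-vNIC transmit thread option in the list above, the `X` in ethernetX.ctxPerDev stands for the vNIC index. A minimal Python sketch that expands the setting for a given number of vNICs could look like this; the helper name is hypothetical and it assumes vNICs are numbered from 0.

```python
def per_vnic_tx_thread_lines(vnic_count: int) -> list[str]:
    """Build one ctxPerDev line per vNIC (hypothetical helper).

    Assumption: vNICs are numbered ethernet0, ethernet1, ... in the VM config.
    """
    return [f'ethernet{index}.ctxPerDev = "1"' for index in range(vnic_count)]


# Example: a VM with two vNICs.
for line in per_vnic_tx_thread_lines(2):
    print(line)
```

Whether an extra transmit thread per vNIC actually helps depends on the packet rate and on having spare CPU cores for those threads, so this is something to test rather than enable blindly.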

To conclude…

Bringing it all together: it’s challenging to virtualize telco applications, as you will need to dig deep into vSphere to get the performance required for those realtime workloads. There are a lot of trade-offs and choices involved. But that makes things interesting, right? 🙂

As for the future, one can imagine containerization could be a good match for telco applications. Combine that with automatic scaling based on performance/load KPIs, and you will have yourself a fully automated VMware vCloud for NFV solution. It will be interesting to see the future development of virtualization in the telecom industry!

Note: This post contains a lot of very interesting stuff I would like to have a closer look at, for instance CPU scheduling behavior and vSphere network tuning. I will definitely deep dive into those subjects in the near future.

A very good whitepaper that further explains some of these considerations and configurations can be found here: http://www.vmware.com/files/pdf/techpaper/vmware-tuning-telco-nfv-workloads-vsphere.pdf