The (Dynamic) NetQueue feature in ESXi, which is enabled by default if the physical NIC (pNIC) supports it, allows incoming network packets to be distributed over different queues. Each queue gets its own ESXi thread for packet processing, and each thread can consume at most one CPU core.

However, (Dynamic) NetQueue and VXLAN are not the best of friends when it comes to distributing network I/O over multiple queues. That is because of the way Virtual Tunnel End Points (VTEPs) are set up. Within a VMware NSX implementation, each ESXi host in the cluster contains at least one VTEP, depending on the NIC load balancing mode chosen. The VTEP is the component that encapsulates and decapsulates VXLAN packets. That means all VXLAN traffic sent by a VM traverses the local VTEP and is received by the VTEP on the destination ESXi host.

Therein lies the problem when it comes to NetQueue and the ability to distribute network I/O streams over multiple queues. A VTEP always has the same MAC address, and the VTEP network has a fixed VLAN tag. MAC address and VLAN tag are the filters most commonly supported by pNICs with VMDq and NetQueue enabled. This seriously restricts the ability to use multiple queues and can therefore limit the network performance of your VXLAN networks. VMware NSX now supports multiple VTEPs per ESXi host. This helps slightly: every additional VTEP adds a MAC address, which gives NetQueue more combinations to filter on. Still, it is far from the desired network I/O parallelism using multiple queues and CPU cores. To overcome that challenge, some pNICs can distribute traffic over queues by filtering on the inner (encapsulated) MAC addresses. RSS can do that for you.
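To make the filtering problem concrete, here is a deliberately simplified Python model of a MAC+VLAN receive filter. The class and function names, the VLAN ID 100, the MAC addresses, and the allocation policy ("each new MAC/VLAN pair gets the next free queue, otherwise it falls back to default queue 0") are illustrative assumptions. This is not how any particular pNIC or the ESXi NetQueue scheduler actually allocates queues. The model only shows that when every VXLAN frame carries the same outer MAC address and VLAN tag, a MAC/VLAN filter sees a single stream, no matter how many VMs sit behind the VTEP.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VxlanFrame:
    outer_dst_mac: str  # MAC address of the receiving VTEP
    outer_vlan: int     # VLAN tag of the VTEP transport network
    inner_src_ip: str   # encapsulated VM traffic; a MAC/VLAN filter never looks at it


class MacVlanQueueFilter:
    """Hypothetical, simplified model of a NetQueue/VMDq-style receive filter.

    Each new (MAC, VLAN) pair gets its own hardware queue until the queues run
    out; after that, new pairs fall back to the default queue 0.
    """

    def __init__(self, num_queues: int) -> None:
        self.num_queues = num_queues
        self.filters: dict[tuple[str, int], int] = {}

    def queue_for(self, frame: VxlanFrame) -> int:
        key = (frame.outer_dst_mac, frame.outer_vlan)
        if key not in self.filters:
            next_queue = len(self.filters) + 1
            self.filters[key] = next_queue if next_queue < self.num_queues else 0
        return self.filters[key]


def queues_used(vtep_macs: list[str], vm_count: int, num_queues: int = 8) -> int:
    """Count the distinct queues hit by traffic from vm_count VMs spread over the VTEPs."""
    nic = MacVlanQueueFilter(num_queues)
    frames = [
        VxlanFrame(vtep_macs[i % len(vtep_macs)], 100, f"10.0.0.{i % 250}")
        for i in range(vm_count)
    ]
    return len({nic.queue_for(f) for f in frames})


print(queues_used(["00:50:56:aa:00:01"], vm_count=100))  # one VTEP -> 1
print(queues_used(["00:50:56:aa:00:01", "00:50:56:aa:00:02"], vm_count=100))  # two VTEPs -> 2
```

Adding VTEPs adds filter keys, which is why multi-VTEP helps a little. The number of queues in use still tracks the number of VTEPs rather than the number of VM flows.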

Receive Side Scaling

Receive Side Scaling (RSS) offers the same basic functionality as (Dynamic) NetQueue: it load balances the processing of received network packets. RSS resolves the single-thread bottleneck by allowing received network packets from a pNIC to be processed across multiple CPU cores.

Single-VM receive performance was evaluated with Mellanox 40GbE NICs. Without RSS, throughput is limited to only 15Gbps for large packets, but when RSS is enabled for both the pNIC and the VM, throughput increases by 40%. RSS also helps in the small-packet receive test case, where throughput increases by 30%. RSS helps when VXLAN is used as well, because VXLAN traffic can be distributed among multiple hardware queues. NICs that offer RSS achieve a throughput of 9.1Gbps in the large-packet test case, whereas NICs without RSS achieve only around 6Gbps. The throughput improvement for the small-packet test case is similar to that of the large-packet test case. Source: VMware performance blog.
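Converting the quoted percentages into absolute numbers makes it easier to compare them with your own measurements. This is only arithmetic on the figures above. The source does not state the exact post-RSS value for the first test, and the non-RSS VXLAN baseline is only "around 6Gbps", so the second ratio (roughly 50% more throughput) is approximate.

```latex
\begin{aligned}
\text{Single VM, large packets:}\quad & 15\,\text{Gbps} \times 1.40 = 21\,\text{Gbps} \\
\text{VXLAN, large packets:}\quad & \frac{9.1\,\text{Gbps}}{\approx 6\,\text{Gbps}} \approx 1.52
\end{aligned}
```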

The big difference between NetQueue and RSS is that RSS uses more sophisticated filters to balance network I/O load over multiple threads. Depending on the pNIC and its RSS support, RSS can use up to a 5-tuple hash to determine which queues to create and how to distribute network I/Os over them. A 5-tuple hash may consist of the following data:

- Source IP

- Destination IP

- Source port

- Destination port

- Protocol

The ability of RSS to use hash calculations to determine and scale queues is key to achieving near line-rate performance for VXLAN traffic. Because traffic destined for a single MAC address can now use multiple queues, RSS is very beneficial for VXLAN traffic running over the VTEPs. Keep in mind that RSS is not required for 1GbE pNICs, but it is a necessity for 10/25/40/50/100GbE pNICs for the same reason VMDq and NetQueue are: at those speeds, a single packet-processing thread becomes the bottleneck.
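The following Python sketch shows how a hash over the 5-tuple can spread flows that share the same outer MAC address over several queues. It uses the Toeplitz hash, the hash function defined in Microsoft's RSS specification and implemented by many NICs, together with a simple indirection table. The sketch rests on several assumptions. The key is Microsoft's published example key. The table has 128 entries. The protocol byte is appended to the hash input to match the 5-tuple list above, although the classic Toeplitz input covers only addresses and ports. Finally, the sketch hashes the inner VM headers directly, whereas the fields a given pNIC actually hashes for VXLAN traffic depend on the NIC and driver.

```python
import ipaddress

# Example 40-byte key from Microsoft's RSS documentation (assumption: real
# pNICs/drivers may use a different, often random, key).
RSS_KEY = bytes.fromhex(
    "6d5a56da255b0ec24167253d43a38fb0d0ca2bcb"
    "ae7b30b477cb2da38030f20c6a42b73bbeac01fa"
)


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """32-bit Toeplitz hash: XOR a sliding 32-bit key window for every set input bit."""
    key_int = int.from_bytes(key, "big")
    key_bits = len(key) * 8
    if len(data) * 8 > key_bits - 32:
        raise ValueError("input too long for this key")
    result = 0
    for i in range(len(data) * 8):
        if data[i // 8] & (0x80 >> (i % 8)):  # input bit i, MSB first
            result ^= (key_int >> (key_bits - 32 - i)) & 0xFFFFFFFF
    return result


def five_tuple_bytes(src_ip: str, dst_ip: str, src_port: int, dst_port: int, proto: int) -> bytes:
    """Serialize an IPv4 5-tuple in network byte order (protocol byte is an assumption)."""
    return (
        ipaddress.IPv4Address(src_ip).packed
        + ipaddress.IPv4Address(dst_ip).packed
        + src_port.to_bytes(2, "big")
        + dst_port.to_bytes(2, "big")
        + bytes([proto])
    )


def rss_queue(flow: tuple, num_queues: int = 4, table_size: int = 128) -> int:
    """Pick a queue: low hash bits index an indirection table that names the queue."""
    indirection_table = [i % num_queues for i in range(table_size)]
    h = toeplitz_hash(RSS_KEY, five_tuple_bytes(*flow))
    return indirection_table[h % table_size]


# Inner flows that all arrive through the same VTEP (same outer MAC and VLAN).
flows = [("192.168.10.11", "192.168.20.22", port, 443, 6) for port in range(49152, 49160)]
for flow in flows:
    print(flow[2], "-> queue", rss_queue(flow))
```

Because the flows differ in their source port, their hashes differ, and the indirection table maps them to different queues even though their outer MAC address and VLAN tag are identical. A given flow always hashes to the same queue, which keeps its packets in order.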

One of the interesting facts about RSS is that it cannot direct an incoming network stream to the same CPU core on which the application process resides. That means that if an application workload is running on one core, the incoming network stream handled by RSS can very well be scheduled on another core. This could result in poor cache efficiency if those cores are in different NUMA nodes. However, the real impact of this ‘limitation’ is debatable.

Configuring RSS

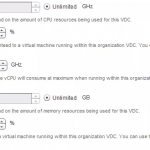

Unlike its semi-equivalent VMDq, RSS is not enabled by default. It needs to be enabled in the driver module, and whether the RSS parameters must be set to enable it depends on the driver module in use. The problem with driver module settings is that it is not always clear which values to use. The descriptions of the driver module parameters differ a lot among the various driver modules. That is not a problem if the value of choice is either zero or one, but it is when you are expected to configure a certain number of queues. The RSS driver module settings are a perfect example of this. Take the ixgbe driver: the following description is returned when executing `esxcli system module parameters list -m ixgbe | grep "RSS"` in the ESXi shell.

The description is quite ambiguous: “Number of Receive-Side Scaling Descriptor Queues, default 1=number of cpus”

This implies that you should configure RSS with a specific number of CPUs. But how do you determine what to configure? The same information for the bnx2x driver module is somewhat clearer: “Control the number of queues in an RSS pool. Max 4.”

The bnx2x example is more straightforward: you can configure up to four RSS queues per pNIC. However, how do you decide whether you need one, two or four queues? The number of queues configured determines how many CPU cycles incoming network traffic can consume. A higher number of queues can result in higher network throughput. But if your workloads do not require that throughput, does configuring too many queues lead to inefficient use of CPU cycles? That depends strongly on how many cores are available in your ESXi host. Obviously, relative to the total CPU cycles available, the number of queues has more impact on CPU consumption in a host with eight CPU cores than in an ESXi host with 36 cores. In the end, it is hard to determine what to configure. Let’s be realistic: there are too many variables to decide upfront which settings to adopt for your (overlay) network utilization.
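To put the 8-core versus 36-core comparison into numbers, the following sketch computes a worst-case upper bound. It relies on a strong simplifying assumption: every RSS queue's thread saturates one full core. Real queue threads rarely run at 100%, so treat the result as a ceiling, not a prediction. The function name is hypothetical.

```python
def worst_case_cpu_share(queues_per_pnic: int, pnics: int, host_cores: int) -> float:
    """Upper bound on the share of host cores RSS packet processing could occupy,
    assuming (hypothetically) that every queue's thread saturates one full core."""
    if host_cores <= 0:
        raise ValueError("host_cores must be positive")
    return min(1.0, queues_per_pnic * pnics / host_cores)


for cores in (8, 36):
    share = worst_case_cpu_share(queues_per_pnic=4, pnics=1, host_cores=cores)
    print(f"{cores} cores: up to {share:.1%} of the host's cores")
# 8 cores: up to 50.0% of the host's cores
# 36 cores: up to 11.1% of the host's cores
```

Four queues on a single pNIC could, in this worst case, tie up half of an 8-core host but only about a ninth of a 36-core host.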

In most cases, a more practical approach is to fine-tune your environment based on insights gathered during a Proof-of-Concept (PoC), or after you have taken the workload into production, because you will then be able to monitor the (overlay) network performance and throughput of your workloads. What is important to understand is that you can change these parameters when you run into limits on network performance or throughput.
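Changing an RSS parameter uses the same esxcli namespace used to list it (`esxcli system module parameters set -m <module> -p <parameters>`). The Python helper below only builds that command line; `rss_set_command` and the `MAX_RSS_QUEUES` table are hypothetical. The ixgbe RSS parameter is commonly set with one comma-separated value per port (for example `RSS=4,4` for a dual-port adapter), but other drivers may expect a single value, so check the parameter description first. Module parameter changes typically take effect only after a host reboot.

```python
import shlex

# Hypothetical lookup of maximum RSS queue counts, taken from the driver
# descriptions quoted above. None means no explicit maximum is documented
# (unknown modules are also treated this way).
MAX_RSS_QUEUES = {"bnx2x": 4, "ixgbe": None}


def rss_set_command(module: str, queues_per_port: list[int]) -> list[str]:
    """Build an esxcli argv that sets the RSS module parameter, one value per port."""
    limit = MAX_RSS_QUEUES.get(module)
    for q in queues_per_port:
        if q < 0 or (limit is not None and q > limit):
            raise ValueError(f"{module}: invalid RSS queue count {q}")
    value = ",".join(str(q) for q in queues_per_port)
    return ["esxcli", "system", "module", "parameters", "set",
            "-m", module, "-p", f"RSS={value}"]


# Print a shell-ready command line to paste into the ESXi shell.
print(shlex.join(rss_set_command("ixgbe", [4, 4])))
```

Building an argument list instead of a single string means that the command can also be passed unchanged to a process runner, and the limit check catches values such as `RSS=8` for a driver that documents a maximum of four.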

More information…

…can be found in the vSphere 6.5 Host Resources Deep Dive book that is available on Amazon!
