Even though the discussion about jumbo frames and their possible gains and risks is not new, we found ourselves having it yet again. Because we had different opinions, it seems like a good idea to elaborate on this topic.

Let’s have a quick recap of what jumbo frames are. The default MTU (Maximum Transmission Unit) for Ethernet is 1500 bytes. Frames that carry a larger payload are called jumbo frames, and an MTU of 9000 bytes is the most common jumbo frame size.

Jumbo frames, or 9000-byte payload frames, have the potential to reduce protocol overhead and CPU cycles, because fewer frames are needed to move the same amount of data.
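To put a number on the overhead argument, here is a small back-of-the-envelope sketch. It assumes untagged Ethernet frames (38 bytes of preamble, header, FCS and inter-frame gap per frame) and IPv4/TCP headers without options (40 bytes), and it ignores iSCSI or NFS headers, so treat the results as approximations, not benchmark results.

```python
import math

# Assumed per-frame wire overhead for untagged Ethernet, in bytes:
# preamble + SFD (8), MAC header (14), FCS (4), inter-frame gap (12).
ETHERNET_WIRE_OVERHEAD = 8 + 14 + 4 + 12
# Assumed IPv4 header (20) and TCP header without options (20).
IP_TCP_HEADERS = 20 + 20


def tcp_payload_per_frame(mtu: int) -> int:
    """Bytes of TCP payload (the MSS) that fit in one frame at this MTU."""
    return mtu - IP_TCP_HEADERS


def wire_efficiency(mtu: int) -> float:
    """Fraction of the bytes on the wire that are TCP payload."""
    return tcp_payload_per_frame(mtu) / (mtu + ETHERNET_WIRE_OVERHEAD)


def frames_needed(num_bytes: int, mtu: int) -> int:
    """Number of frames needed to carry num_bytes of TCP payload."""
    return math.ceil(num_bytes / tcp_payload_per_frame(mtu))


if __name__ == "__main__":
    one_mib = 1024 * 1024
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: payload/frame={tcp_payload_per_frame(mtu)}, "
              f"efficiency={wire_efficiency(mtu):.2%}, "
              f"frames per MiB={frames_needed(one_mib, mtu)}")
```

Under these assumptions the wire efficiency only improves from roughly 94.9% to 99.1%, but moving 1 MiB takes 118 frames instead of 719. The roughly six-fold reduction in per-frame processing is where most of the potential CPU savings would come from.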

Typically, jumbo frames are considered for IP storage networks or vMotion networks. A lot of performance benchmarking is already described on the web, and it is interesting to see the variety of opinions on whether to adopt jumbo frames or not. Check this blogpost and this blogpost on jumbo frame performance compared to a standard MTU size. The question of whether ‘jumbo frames provide a significant performance advantage’ is still up in the air.

Besides jumbo frames, there are other techniques to improve network throughput and lower CPU utilization. Modern NICs support the Large Segment Offload (LSO) and Large Receive Offload (LRO) offloading mechanisms. With LSO, the host hands a large buffer to the NIC, which splits it into MTU-sized TCP segments; LRO does the reverse on receive, merging incoming segments before they reach the TCP stack. Note: LSO is also referred to as TSO (TCP Segmentation Offload). Both are configurable. LSO/TSO is enabled by default if the NIC hardware supports it. LRO is enabled by default when using VMXNET virtual machine adapters.
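Conceptually, LSO/TSO and LRO move segmentation and reassembly work from the CPU to the NIC. The sketch below only models that idea with (sequence number, length) tuples; the function names are hypothetical, and real NICs also rewrite headers and checksums and cap how much LRO aggregates, none of which is modelled here.

```python
from typing import List, Tuple

Segment = Tuple[int, int]  # (TCP sequence number, payload length)


def tso_segment(buffer_len: int, mss: int, start_seq: int = 0) -> List[Segment]:
    """Model of LSO/TSO: one large buffer from the host is sliced into
    MSS-sized TCP segments, advancing the sequence number each time."""
    segments = []
    offset = 0
    while offset < buffer_len:
        length = min(mss, buffer_len - offset)
        segments.append((start_seq + offset, length))
        offset += length
    return segments


def lro_coalesce(segments: List[Segment]) -> List[Segment]:
    """Model of LRO: contiguous, in-order segments are merged into larger
    segments before being handed to the TCP stack (no size cap modelled)."""
    merged: List[Segment] = []
    for seq, length in segments:
        if merged and merged[-1][0] + merged[-1][1] == seq:
            prev_seq, prev_len = merged[-1]
            merged[-1] = (prev_seq, prev_len + length)
        else:
            merged.append((seq, length))
    return merged


if __name__ == "__main__":
    segs = tso_segment(65535, 1460)  # MSS for a 1500-byte MTU
    print(len(segs), segs[-1])       # 45 (64240, 1295)
    print(lro_coalesce(segs))        # [(0, 65535)]
```

With a 9000-byte MTU (MSS 8960) the same 64 KB buffer becomes 8 segments instead of 45, which is why offloading and jumbo frames attack the same per-segment cost from different angles.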

Risks?

Let’s put the performance aspects aside and look into the possible risks involved in implementing jumbo frames. The thing is, to be effective, jumbo frames must be enabled end to end in the network path. The main risk when adopting jumbo frames is that if one component in the network path is not properly configured for jumbo frames, an MTU mismatch occurs.
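The end-to-end requirement boils down to a simple rule: the largest packet that can cross a layer-2 path is bounded by the smallest MTU on that path. A minimal sketch of that check, using a hypothetical path loosely modelled on the lab setup described below (component names and values are illustrative only):

```python
from typing import List, Tuple

Hop = Tuple[str, int]  # (component name, configured MTU)


def effective_path_mtu(path: List[Hop]) -> int:
    """The largest packet that survives the whole path is bounded by the smallest MTU."""
    return min(mtu for _, mtu in path)


def mtu_mismatches(path: List[Hop], required_mtu: int) -> List[str]:
    """Components configured below the MTU the endpoints intend to use."""
    return [name for name, mtu in path if mtu < required_mtu]


# Hypothetical iSCSI path: everything at 9000 except the physical switch port.
lab_path: List[Hop] = [
    ("VM vNIC", 9000),
    ("vSwitch", 9000),
    ("physical switch port", 1500),
    ("NAS interface", 9000),
]

if __name__ == "__main__":
    print(effective_path_mtu(lab_path))    # 1500
    print(mtu_mismatches(lab_path, 9000))  # ['physical switch port']
```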

Your main concern should be whether the network and storage components are correctly configured for jumbo frames. In my opinion, the following situation is the interesting one.

I did a quick lab setup to capture the behavior of MTU mismatches in my IP storage (layer-2) network. The guest OS in this scenario is a Windows Server 2012 R2 VM with an iSCSI LUN attached to it. I captured iSCSI (TCP 3260) frames on the VM’s dedicated iSCSI network adapter, which is connected to the dedicated iSCSI VLAN.

My Cisco network switch is configured with the standard MTU size of 1500, so this is where the MTU mismatch occurs. Looking at the captured data, we immediately see ‘TCP ACKed unseen segment’ entries when writing to the LUN. Not what you want to see in your production environment! This can lead to misbehavior of your virtual machines and possible data corruption.

This behavior is expected, though. Within a layer-2 network, an Ethernet switch simply drops frames larger than the port MTU, so you are silently black-holing all traffic that exceeds the 1500-byte MTU. It is important to know that fragmentation can occur at layer 3 (an IPv4 router can fragment packets that do not have the Don’t Fragment bit set), but never within a layer-2 network! This is a characteristic of layer-2 Ethernet.
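To make the layer-2 versus layer-3 difference concrete, the sketch below models both behaviors. It is a simplification under stated assumptions: IPv4 only, a 20-byte IP header without options, and the MTU compared against the IP packet size. `layer2_forward` and `layer3_forward` are hypothetical names, not a real API.

```python
from typing import List, Optional

IPV4_HEADER = 20  # assumed: IPv4 header without options


def layer2_forward(packet_len: int, port_mtu: int) -> bool:
    """A layer-2 switch forwards the frame or silently drops it; it never fragments."""
    return packet_len <= port_mtu


def layer3_forward(packet_len: int, link_mtu: int, dont_fragment: bool) -> Optional[List[int]]:
    """An IPv4 router may fragment an oversized packet unless the DF bit is set.

    Returns the total length of each resulting packet, or None when the packet
    is dropped (a real router would then send ICMP 'fragmentation needed').
    """
    if packet_len <= link_mtu:
        return [packet_len]
    if dont_fragment:
        return None
    data = packet_len - IPV4_HEADER
    # Fragment data must be a multiple of 8 bytes, except in the last fragment.
    max_data = (link_mtu - IPV4_HEADER) // 8 * 8
    fragments = []
    while data > 0:
        chunk = min(max_data, data)
        fragments.append(chunk + IPV4_HEADER)
        data -= chunk
    return fragments


if __name__ == "__main__":
    print(layer2_forward(9000, 1500))                       # False: dropped
    print(layer3_forward(9000, 1500, dont_fragment=False))  # six 1500s and a 120
```

A 9000-byte packet hitting a 1500-byte layer-2 port is simply dropped, whereas a router would split it into six 1500-byte fragments plus a 120-byte tail if DF is not set. TCP stacks typically set the DF bit for path MTU discovery, so even at layer 3 an oversized TCP segment is often dropped rather than fragmented.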

Typically, IP storage networks and vMotion networks are layer-2 networks (although vMotion now supports layer-3).

The same situation would occur if the storage interfaces on my NAS had a mismatching MTU. But when the physical switch is configured with a larger MTU (9000) than the endpoints (1500), no harm is done, because a 1500-byte frame simply fits within a 9000-byte MTU. 🙂

Once I configured every component with jumbo frames / MTU 9000, we’re all good as the following capture shows:
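Besides a packet capture, a common way to verify the jumbo frame path is a maximum-size ping with fragmentation disabled: if any hop has a smaller MTU, the ping fails. The payload size is the MTU minus 28 bytes (20-byte IPv4 header plus 8-byte ICMP header). This sketch only builds the command lines; the target address is a hypothetical documentation address, and on ESXi you may also need to pick the right VMkernel interface (for example with `-I vmk1`).

```python
IPV4_HEADER = 20
ICMP_HEADER = 8


def max_ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER - ICMP_HEADER


def df_ping_commands(target: str, mtu: int) -> dict:
    """Don't-fragment ping commands that only succeed if every hop passes this MTU."""
    size = max_ping_payload(mtu)
    return {
        "esxi": f"vmkping -d -s {size} {target}",      # -d: don't fragment
        "windows": f"ping -f -l {size} {target}",      # -f: set DF flag
        "linux": f"ping -M do -s {size} {target}",     # -M do: prohibit fragmentation
    }


if __name__ == "__main__":
    for platform, cmd in df_ping_commands("192.0.2.10", 9000).items():
        print(f"{platform}: {cmd}")
```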

To conclude…

To use jumbo frames or not to use jumbo frames, that is the question

Depending on the situation, I always used to implement jumbo frames where I saw fit. For instance, a greenfield infrastructure implementation would be configured with jumbo frames if tests showed improved performance. The risk that (future) infrastructure components are misconfigured, MTU-wise, can be mitigated by introducing strict change and configuration management along with configuration compliance checks.
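A configuration compliance check can be as simple as comparing an inventory of interfaces against a per-network MTU policy. The sketch below assumes you can export such an inventory from your own tooling; the `Interface` record, the `MTU_POLICY` values and the device names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Interface:
    device: str
    name: str
    network: str  # network role, e.g. "iscsi" or "vmotion"
    mtu: int


# Hypothetical policy: minimum MTU required per network role.
MTU_POLICY: Dict[str, int] = {"iscsi": 9000, "vmotion": 9000}


def non_compliant(inventory: List[Interface], policy: Dict[str, int]) -> List[str]:
    """Report interfaces whose MTU is below the policy for their network role."""
    findings = []
    for itf in inventory:
        required = policy.get(itf.network)
        if required is not None and itf.mtu < required:
            findings.append(
                f"{itf.device}/{itf.name}: MTU {itf.mtu} < {required} ({itf.network})")
    return findings


if __name__ == "__main__":
    inventory = [
        Interface("esxi01", "vmk1", "iscsi", 9000),
        Interface("switch01", "Gi1/0/1", "iscsi", 1500),
        Interface("nas01", "eth1", "iscsi", 9000),
        Interface("esxi01", "vmk0", "management", 1500),  # no policy: ignored
    ]
    for finding in non_compliant(inventory, MTU_POLICY):
        print(finding)
```

The check only flags MTUs below the policy, since a component with a larger MTU than its neighbors does no harm; a stricter policy could require exact matches on the endpoints.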

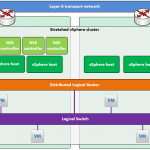

Interestingly, as we adopt more and more overlay services in our infrastructures, MTU sizes must change accordingly. Think about the transport network(s) in your VMware NSX environment: because of the VXLAN encapsulation overhead, you are required to raise the MTU to at least 1600.
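The 1600-byte figure follows from the VXLAN header arithmetic. This sketch computes the minimum transport MTU, assuming the encapsulated packet carries its inner Ethernet header (14 bytes), an optional inner 802.1Q tag (4 bytes), the VXLAN header (8 bytes), an outer UDP header (8 bytes) and an outer IPv4 (20 bytes) or IPv6 (40 bytes) header.

```python
INNER_ETHERNET = 14
VLAN_TAG = 4
VXLAN_HEADER = 8
UDP_HEADER = 8
OUTER_IPV4 = 20
OUTER_IPV6 = 40


def required_transport_mtu(inner_mtu: int = 1500, inner_vlan_tag: bool = False,
                           outer_ipv6: bool = False) -> int:
    """Minimum MTU on the transport network for VXLAN-encapsulated traffic."""
    inner_frame = inner_mtu + INNER_ETHERNET + (VLAN_TAG if inner_vlan_tag else 0)
    outer_ip = OUTER_IPV6 if outer_ipv6 else OUTER_IPV4
    return inner_frame + VXLAN_HEADER + UDP_HEADER + outer_ip


if __name__ == "__main__":
    print(required_transport_mtu())                     # 1550
    print(required_transport_mtu(inner_vlan_tag=True))  # 1554
    print(required_transport_mtu(outer_ipv6=True))      # 1570
```

For a standard 1500-byte VM MTU this gives 1550 bytes (1554 with an inner VLAN tag, 1570 with an IPv6 underlay), so 1600 leaves some headroom. VMs that use jumbo frames themselves would need a correspondingly larger transport MTU.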

So whether to change the MTU on your infrastructure components is no longer the question…

Thinking about network performance, it would be good to see the impact of LRO/LSO offloading compared to, or in combination with, jumbo frames. Let’s see if I can define some sensible tests for that…

Are there any concerns about dropping iSCSI and cluster communication from jumbo frames back down to an MTU of 1500 on a running config? I’m assuming that if I connect to all the servers at the same time, edit the MTU of the NICs and hit APPLY quickly on all of them, all the servers will only be trying to communicate with standard packets. After that, I’ll probably reduce the MTU on the switches just to have everything match up. Is there anything to worry about when dropping down from jumbo to regular size frames? In what order would you do this?

Well, to some extent that depends on the workloads and their I/O consumption. However, I would start with the source (ESXi host), then the destination (array), then the network. Doing this ensures the source sends out I/O requests with the lower MTU. Makes sense?

A very good article. Just wanted to share one point of view:

Given the technology and the different ways in which switches support jumbo frames, I believe the statement “Jumbo Frames must be configured correctly end-to-end. This means on the vSwitch, vmkernel interfaces, physical switches, endpoint – everything must be configured to support the larger frames end-to-end” is not accurate.

In deployments from a number of vendors, physical switches can be segregated into switching domains, and the term ‘end-to-end’ can confuse even experienced engineers and architects.

Switches from many vendors can now even have different MTUs for different port-channel / LACP groups, which was not the case five years ago.

Imagine a storage array connected to a switch over a port channel, carrying an iSCSI VLAN that leads to a VMkernel interface on a vDS. If someone does not understand that only the components in this path need a larger MTU, and confuses this with needing a larger MTU on the whole switch, they might run into a blocker when it comes to implementing jumbo frames.

Keep safe.

Cheers,

Imran

Is anyone configuring a mechanism to pass jumbo frames on to a network that can process them, instead of just dropping the frames?

Well, typically layer-3 network devices (routers or layer-3 switches) are able to fragment IPv4 packets, as long as the Don’t Fragment bit is not set. This means packets get divided into fragments that fit the default MTU size. That does, however, ‘break’ the performance benefit of jumbo frames.