Remote Direct Memory Access (RDMA) extends Direct Memory Access (DMA), the ability to access host memory directly without CPU intervention, so that one host can access memory data on another host. Because far fewer CPU cycles are needed to process network packets, RDMA significantly improves throughput and performance while lowering latency.

Traditional Data Path vs RDMA

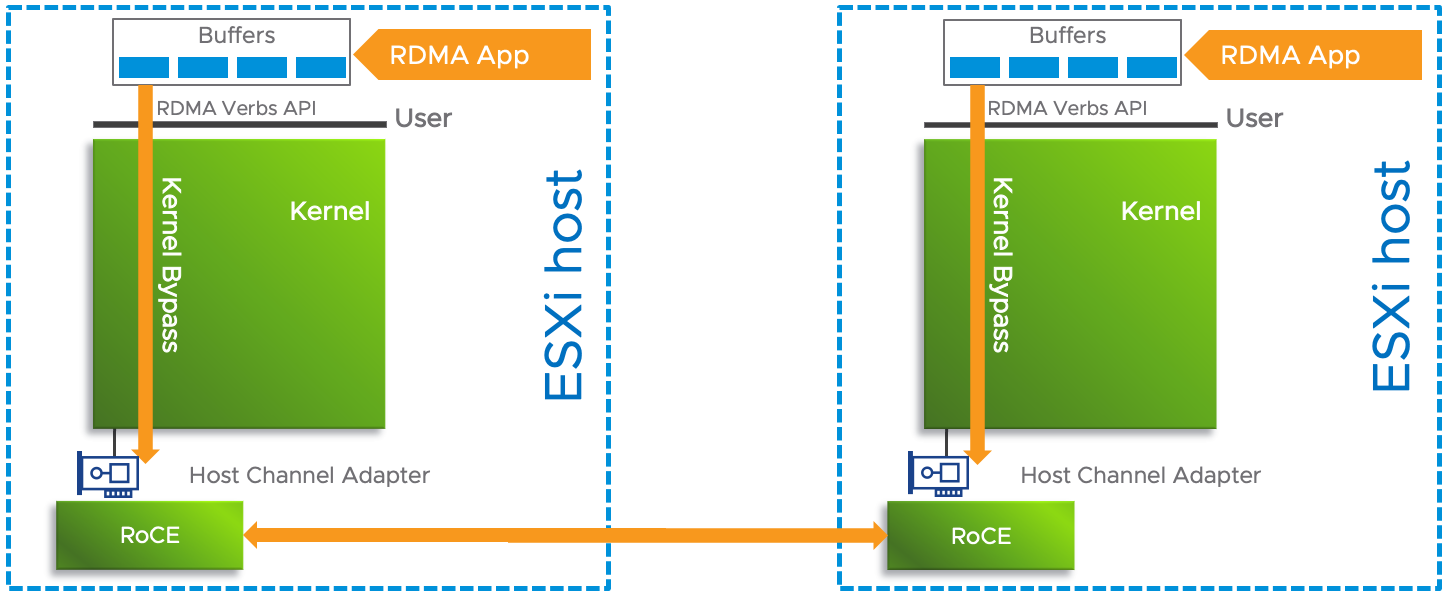

In a conventional network data path, an application moves data through buffers using the sockets API in user space. In the kernel, the data then passes through the TCP and IPv4/IPv6 stack and the device driver before it reaches the network fabric, and every one of these steps consumes CPU cycles. With today's high-bandwidth networks (25, 40, 50, and 100 GbE), this becomes a challenge because substantial CPU time is needed just to process network traffic.

RDMA addresses this by bypassing the kernel. Once the communication path between source and destination is set up, the RDMA application talks directly to the Host Channel Adapter (HCA) using the RDMA Verbs API; you can think of an HCA as an RDMA-capable Network Interface Card (NIC). Because far fewer CPU cycles are involved, network latency drops while data throughput increases. RDMA can be transported over InfiniBand, RDMA over Converged Ethernet (RoCE), and iWARP network fabrics.
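To make the contrast concrete, the Python sketch below models the two data paths from the paragraphs above as ordered lists of stages and reports which stages the host kernel handles. It is a toy illustration, not a performance model: the `Stage` type, the stage names, and the simplification that the RDMA data path has no kernel stages once the connection is set up are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    in_kernel: bool  # True if the host kernel processes data at this stage


# Conventional path: sockets API in user space, then the kernel network stack.
SOCKETS_PATH = [
    Stage("application buffers (sockets API, user space)", in_kernel=False),
    Stage("TCP", in_kernel=True),
    Stage("IPv4/IPv6", in_kernel=True),
    Stage("device driver", in_kernel=True),
    Stage("network fabric", in_kernel=False),
]

# RDMA data path after connection setup: the application talks to the HCA
# through the Verbs API and the kernel is bypassed (simplified assumption).
RDMA_PATH = [
    Stage("application (RDMA Verbs API, user space)", in_kernel=False),
    Stage("HCA (RDMA-capable NIC)", in_kernel=False),
    Stage("network fabric (InfiniBand, RoCE, or iWARP)", in_kernel=False),
]


def kernel_stages(path: list[Stage]) -> list[str]:
    """Return the names of the stages handled by the host kernel."""
    return [stage.name for stage in path if stage.in_kernel]


if __name__ == "__main__":
    for label, path in (("sockets", SOCKETS_PATH), ("RDMA", RDMA_PATH)):
        stages = kernel_stages(path)
        print(f"{label}: {len(stages)} kernel stage(s) {stages}")
```

The model deliberately ignores connection setup, which still involves the kernel; the point is only that the steady-state RDMA data path skips the kernel stages the sockets path goes through.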

RDMA Support in vSphere

RDMA is fully supported in vSphere, allowing ESXi hosts to communicate with each other over HCAs. Examples include iSCSI Extensions for RDMA (iSER), introduced in vSphere 6.7, and NVMe over Fabrics (NVMe-oF) using RDMA in vSphere 7. With vSphere 7 Update 1, RDMA communication with native endpoints, such as storage arrays, is also supported.

Virtual workloads in a vSphere environment can use RDMA as well. High-Performance Computing (HPC) applications, database backends, and big data platforms are among the workloads that benefit from the higher I/O rates and lower latency RDMA provides.

RDMA Support for Virtual Machines

There are multiple options for exposing RDMA to virtual machines (VMs). The first is to use (Dynamic) DirectPath I/O to pass an HCA or RDMA-capable NIC through directly to the VM. This creates a 1-to-1 relationship between the adapter and the VM and delivers the best performance, but it rules out features such as vMotion.

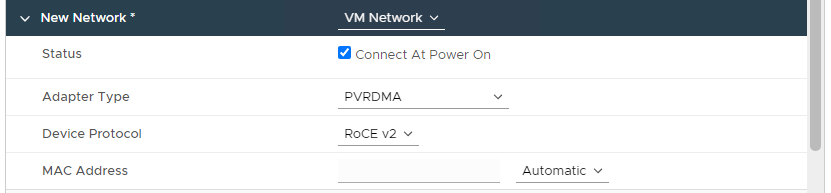

The second option, Paravirtual RDMA (PVRDMA, also called vRDMA), keeps vMotion available for workload portability. PVRDMA was introduced with vSphere 6.5, requires VM hardware version 13 or later, and is available for Linux x64 guest operating systems. It uses RDMA over Converged Ethernet (RoCE) and supports both RoCE v1 and RoCE v2. The difference is that RoCE v1 works only within a switched (layer 2) network, whereas RoCE v2 can be routed. The advantage of RoCE is that it runs over an Ethernet fabric, so there is no explicit requirement for a separate fabric as there is with InfiniBand. The Ethernet fabric does need to support RDMA, primarily through Priority Flow Control (PFC).
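The practical consequence of the RoCE v1 versus v2 distinction can be sketched as a small helper that reports which version a pair of endpoints needs. This is a hedged sketch, not a VMware tool: `required_roce_version` is a hypothetical name, and it assumes that two interfaces in the same IP subnet share one switched (layer 2) network, while interfaces in different subnets are reached through a router. RoCE v2 carries RDMA over UDP/IP (UDP destination port 4791), which is what makes it routable.

```python
import ipaddress


def required_roce_version(src: str, dst: str) -> str:
    """Return the minimum RoCE version that can carry traffic from src to dst.

    src and dst are interface addresses in CIDR notation, e.g. "10.0.1.5/24".
    Assumption: interfaces in the same IP subnet share one switched (layer 2)
    network, so RoCE v1 suffices; anything else is routed and needs RoCE v2.
    """
    a = ipaddress.ip_interface(src)
    b = ipaddress.ip_interface(dst)
    if a.network == b.network:
        return "RoCE v1"
    return "RoCE v2"


if __name__ == "__main__":
    print(required_roce_version("10.0.1.5/24", "10.0.1.9/24"))  # RoCE v1
    print(required_roce_version("10.0.1.5/24", "10.0.2.9/24"))  # RoCE v2 (routed)
```

RoCE v2 also works inside a single switched network, so standardizing on v2 everywhere is a valid choice; the helper only reports when v1 would be sufficient.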

Configuring PVRDMA

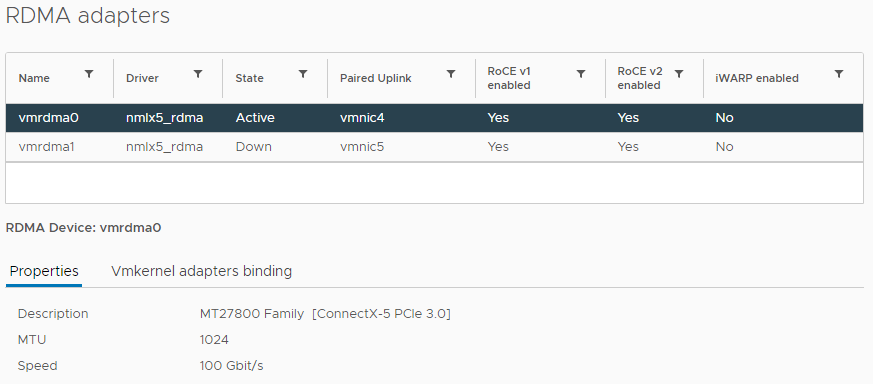

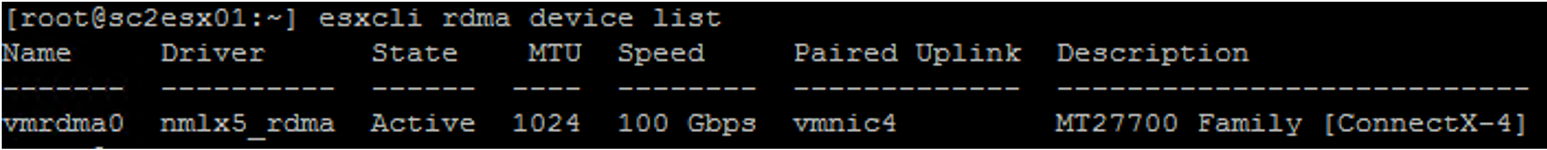

To configure PVRDMA for a VM, the Ethernet fabric must support RDMA and the host needs an HCA. To check whether a host has RDMA-capable NICs, run `esxcli rdma device list`, or use the vSphere Client for a graphical view.

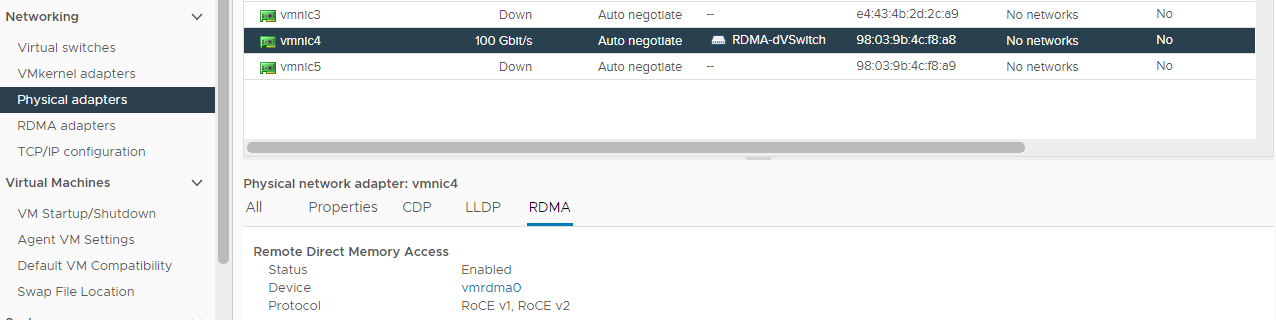

In the vSphere Client, go to an ESXi host > Configure > Physical Adapters. All of the host's network interfaces are listed, each with an RDMA section that shows whether RDMA is supported.
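If you prefer to script the `esxcli rdma device list` check rather than inspect the adapters by eye, the command's tabular output can be parsed. The Python sketch below assumes the default fixed-width layout, with a header row followed by a dashed separator row. The column names (Name, Driver, State, MTU, Speed, Paired Uplink, Description) and the sample values are illustrative assumptions, so compare them with the output on your own host before relying on the parser. `parse_esxcli_table` and `active_rdma_devices` are hypothetical helpers, not part of esxcli.

```python
# Illustrative sample of `esxcli rdma device list` output. The columns and
# values are assumptions for this sketch; check them against your own host.
SAMPLE = """\
Name     Driver      State   MTU   Speed    Paired Uplink  Description
-------  ----------  ------  ----  -------  -------------  -----------
vmrdma0  nmlx5_rdma  Active  4096  25 Gbps  vmnic4         MT27800 Family [ConnectX-5]
vmrdma1  nmlx5_rdma  Down    4096  0        vmnic5         MT27800 Family [ConnectX-5]
"""


def parse_esxcli_table(text: str) -> list[dict[str, str]]:
    """Parse a fixed-width esxcli table into one dict per row.

    Column boundaries are taken from the dashed separator line, so values
    that contain single spaces (such as "25 Gbps") stay intact.
    """
    lines = [ln for ln in text.splitlines() if ln.strip()]
    header, rule, rows = lines[0], lines[1], lines[2:]

    # Record the start offset of each run of dashes; each run is one column.
    starts = [i for i, ch in enumerate(rule)
              if ch == "-" and (i == 0 or rule[i - 1] != "-")]
    # Column k spans starts[k] up to starts[k + 1]; the last runs to end of line.
    bounds = list(zip(starts, starts[1:] + [None]))

    names = [header[s:e].strip() for s, e in bounds]
    return [{name: row[s:e].strip() for name, (s, e) in zip(names, bounds)}
            for row in rows]


def active_rdma_devices(rows: list[dict[str, str]]) -> list[str]:
    """Names of RDMA devices that are Active and paired with a physical uplink."""
    return [r["Name"] for r in rows
            if r.get("State") == "Active" and r.get("Paired Uplink")]


if __name__ == "__main__":
    devices = parse_esxcli_table(SAMPLE)
    print(active_rdma_devices(devices))  # ['vmrdma0']
```

On a host, the text would come from running the command itself, for example through Python's `subprocess` module, instead of the embedded sample.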

Note: VMs on separate ESXi hosts need an HCA for RDMA to work. Because NIC teaming is not supported with PVRDMA, the HCA must be the only uplink on the vSphere Distributed Switch. VMs on the same ESXi host, or VMs that use the TCP-based fallback, do not require an HCA.
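The two rules in this note can be expressed as simple checks. In this sketch, `needs_hca` and `pvrdma_uplinks_ok` are hypothetical helper names and the uplink names (such as `vmnic4`) are placeholders; the checks only encode what the note states, namely that cross-host PVRDMA traffic needs an HCA and that the HCA must be the sole Distributed Switch uplink.

```python
def needs_hca(same_host: bool, tcp_fallback: bool) -> bool:
    """Return True if a pair of PVRDMA VMs needs an HCA.

    VMs on separate ESXi hosts need an HCA for RDMA; VMs on the same host,
    or VMs using the TCP-based fallback, do not.
    """
    return not same_host and not tcp_fallback


def pvrdma_uplinks_ok(dvs_uplinks: list[str], hca_uplink: str) -> bool:
    """Return True if the HCA is the one and only Distributed Switch uplink.

    NIC teaming is not supported with PVRDMA, so any additional uplink,
    or a missing HCA uplink, fails the check.
    """
    return dvs_uplinks == [hca_uplink]


if __name__ == "__main__":
    print(needs_hca(same_host=False, tcp_fallback=False))     # True
    print(pvrdma_uplinks_ok(["vmnic4"], "vmnic4"))            # True
    print(pvrdma_uplinks_ok(["vmnic4", "vmnic5"], "vmnic4"))  # False (teamed)
```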

When RDMA is enabled, ESXi pairs the RDMA adapter with a physical uplink and binds it to a VMkernel interface.

On the VM side, VM hardware version 13 or later is required. When the guest OS is set to Linux x64, PVRDMA can be selected as the network adapter type, and a device protocol option lets you choose RoCE v1 or RoCE v2.
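The VM-side requirements can be collected into a simple pre-flight check. The sketch assumes a hypothetical, simplified `VmConfig` record; the field names and string values such as "linux-x64" and "PVRDMA" are illustrative placeholders, not vSphere API properties. It returns a list of problems, so an empty list means the VM meets the requirements described above.

```python
from dataclasses import dataclass

# Device protocols offered for a PVRDMA adapter.
SUPPORTED_PROTOCOLS = ("RoCE v1", "RoCE v2")
MIN_HW_VERSION = 13


@dataclass
class VmConfig:
    """Hypothetical, simplified view of a VM's relevant settings."""
    hw_version: int
    guest_os: str         # placeholder label, e.g. "linux-x64"
    adapter_type: str     # placeholder label, e.g. "PVRDMA"
    device_protocol: str  # "RoCE v1" or "RoCE v2"


def pvrdma_problems(vm: VmConfig) -> list[str]:
    """List every reason this VM cannot use a PVRDMA adapter as configured."""
    problems = []
    if vm.hw_version < MIN_HW_VERSION:
        problems.append(f"VM hardware version {vm.hw_version} < {MIN_HW_VERSION}")
    if vm.guest_os != "linux-x64":
        problems.append(f"guest OS {vm.guest_os!r} is not Linux x64")
    if vm.adapter_type != "PVRDMA":
        problems.append(f"adapter type {vm.adapter_type!r} is not PVRDMA")
    if vm.device_protocol not in SUPPORTED_PROTOCOLS:
        problems.append(f"device protocol {vm.device_protocol!r} is not RoCE v1/v2")
    return problems


if __name__ == "__main__":
    ok = VmConfig(hw_version=13, guest_os="linux-x64",
                  adapter_type="PVRDMA", device_protocol="RoCE v2")
    old = VmConfig(hw_version=11, guest_os="linux-x64",
                   adapter_type="PVRDMA", device_protocol="RoCE v2")
    print(pvrdma_problems(ok))   # []
    print(pvrdma_problems(old))
```

Returning every problem at once, rather than failing on the first one, makes it easier to fix a misconfigured VM in a single pass.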