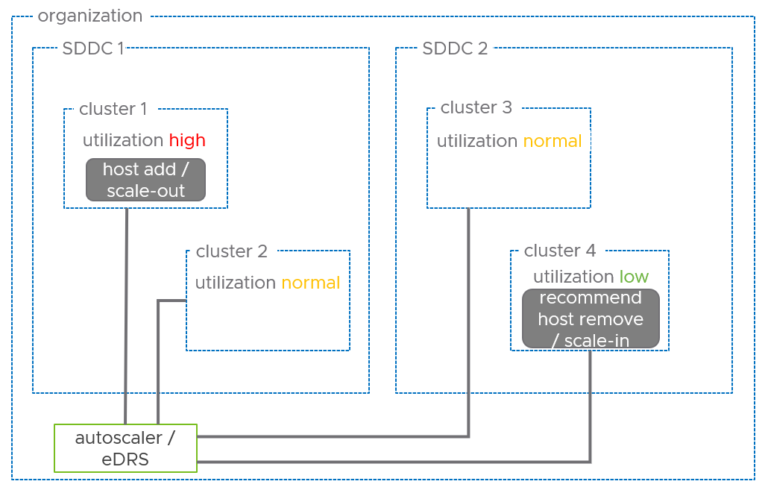

Better Resource Efficiency with Cross Cluster EDRS

The Elastic Distributed Resource Scheduler (EDRS) is a unique policy-based approach to cloud elasticity with VMware Cloud on AWS. It lets customers scale their VMware Cloud on AWS clusters according…